Topic Modelling: A Deep Dive into BERTopic

Unveiling the Power of Topic Modelling

Introduction

In an era of information overload, it is increasingly hard to derive meaningful insights from large amounts of unstructured data. Topic modeling techniques offer a practical way to analyze and categorize text. In this article, we will explore the concept of topic modeling and its significance, then work through BERTopic, a Python library that extracts topics from transformer-based document embeddings in the style of BERT (Bidirectional Encoder Representations from Transformers).

Understanding Topic Modelling

Topic modeling is a technique used to uncover latent thematic structures within a collection of documents. It aims to discover the underlying topics or themes that pervade the dataset, allowing us to organize, summarize, and gain insights from large text corpora. Classical topic modeling algorithms, such as Latent Dirichlet Allocation (LDA) or Non-Negative Matrix Factorization (NMF), work from word counts: they assign topics to individual documents based on how words are distributed across the corpus.
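As a rough illustration of the word distributions these models start from, the sketch below builds a small document-term matrix in plain Python. The corpus and the helper name bag_of_words are invented for illustration; real pipelines would typically use a library vectorizer instead.

```python
from collections import Counter

# Toy corpus, invented purely for illustration.
docs = [
    "the striker scored a late goal",
    "the goalkeeper saved the penalty",
    "the central bank raised interest rates",
]

def bag_of_words(text):
    """Count how often each whitespace-separated token occurs in a document."""
    return Counter(text.lower().split())

# One word-count mapping per document: the raw input to count-based topic models.
doc_term_counts = [bag_of_words(doc) for doc in docs]

# Corpus-wide vocabulary, sorted so that the column order is deterministic.
vocabulary = sorted(set().union(*doc_term_counts))

# Dense document-term matrix: rows are documents, columns are vocabulary words.
doc_term_matrix = [[counts[word] for word in vocabulary] for counts in doc_term_counts]
```

Each row of doc_term_matrix is one document's word distribution; a model such as LDA or NMF would factor a matrix of this shape into topics.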

BERTopic

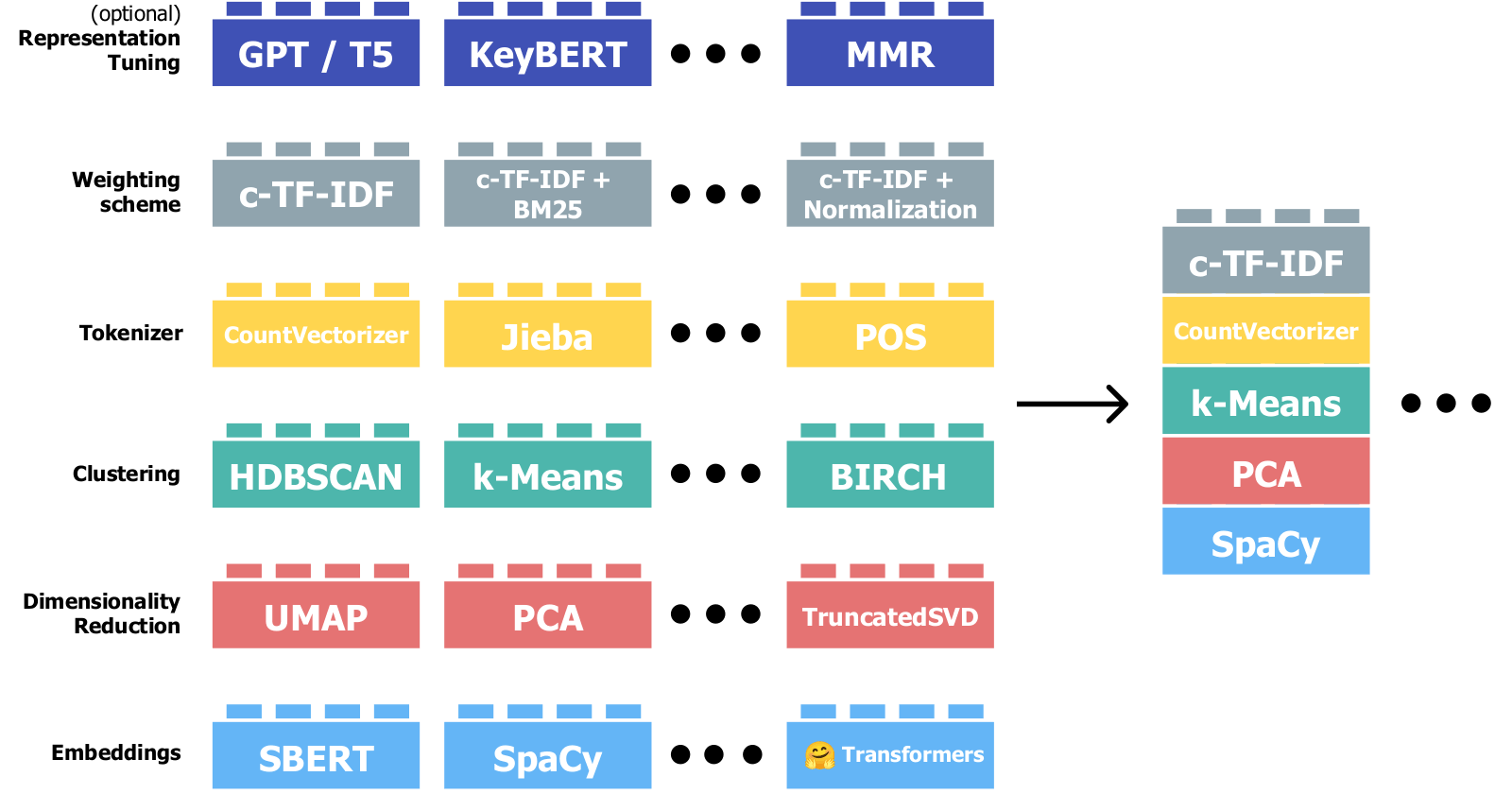

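BERTopic is a Python package developed by Maarten Grootendorst that builds topics from transformer embeddings. It embeds each document with a pretrained language model (by default a compact Sentence-Transformers model rather than the original BERT), reduces the dimensionality of the embeddings with UMAP, clusters them with HDBSCAN, and describes each cluster with a class-based TF-IDF (c-TF-IDF) over its words. Because the embeddings capture the context and meaning of words, documents on the same theme tend to end up in the same cluster even when they share few exact terms.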
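To make the idea of a topic representation more concrete, the sketch below scores words per topic with a class-based TF-IDF, the weighting BERTopic uses to pick topic keywords. It is a simplified plain-Python rendering, not the library's code: the function name c_tf_idf, the whitespace tokenization, and the two hand-made clusters are assumptions for illustration.

```python
import math
from collections import Counter

def c_tf_idf(docs_per_topic):
    """Score words per topic with a class-based TF-IDF.

    docs_per_topic maps a topic id to the list of documents in that topic.
    All documents of a topic are joined and treated as one long document.
    """
    # Term frequencies per topic (class).
    tf = {
        topic: Counter(" ".join(docs).lower().split())
        for topic, docs in docs_per_topic.items()
    }
    # Frequency of each word across all topics.
    total_freq = Counter()
    for counts in tf.values():
        total_freq.update(counts)
    # Average number of words per topic.
    avg_words = sum(total_freq.values()) / len(tf)
    # Weight = frequency in the topic * log(1 + avg_words / frequency overall).
    return {
        topic: {
            word: count * math.log(1 + avg_words / total_freq[word])
            for word, count in counts.items()
        }
        for topic, counts in tf.items()
    }

# Hypothetical clusters, invented for illustration.
clusters = {
    0: ["the striker scored the goal", "the goal won the match"],
    1: ["the bank raised the rates", "the rates hurt the bank"],
}
scores = c_tf_idf(clusters)
```

In this toy data, "the" occurs four times in each cluster but is shared by both, so it scores below "goal" or "bank", which occur only twice but are specific to one cluster.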

Installing BERTopic

To install the BERTopic package, you can use the following pip command:

pip install bertopic

Exploring BERTopic Features

BERTopic offers a range of features to facilitate topic modeling, including:

Text preprocessing: BERTopic needs little text cleaning, because the embedding model works on full sentences. Stopwords can be filtered when the topic keywords are built, by passing a custom vectorizer through the vectorizer_model argument.

BERTopic model creation: The library enables the creation of a BERTopic model, which automatically generates document embeddings using a pretrained transformer model.

Topic visualization: BERTopic allows visualizing topics and their relationships through interactive visualizations.

Advanced customization: The package offers numerous customization options to fine-tune topic modeling, such as selecting the number of topics and merging or reducing topics.

Implementing BERTopic

Now, let's dive into the implementation of BERTopic.

Text Preprocessing

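To prepare our text data for topic modeling, we only need light cleanup. BERTopic does not ship a preprocessing module, and heavy cleaning is usually unnecessary: the embedding model relies on full sentences for context, so lowercasing, removing stopwords, and lemmatizing can strip out useful signal. Collapsing whitespace and dropping empty documents is typically enough. Stopwords are better handled when the topic keywords are built, for example by passing a scikit-learn CountVectorizer with stop_words="english" as the vectorizer_model argument.

import re

docs = [...] # List of documents

# Light cleanup only: collapse whitespace and drop empty documents

processed_docs = [re.sub(r"\s+", " ", doc).strip() for doc in docs if doc.strip()]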
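For noisy corpora such as scraped pages or social media posts, the common steps mentioned above (lowercasing and stopword removal) can be sketched in plain Python. This helper is not part of BERTopic: the names clean_document and STOPWORDS, the tiny stopword list, and the sample documents are assumptions made for illustration, and lemmatization is left out because it needs an external NLP library.

```python
import re

# Tiny illustrative stopword list; a real project would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "of", "on", "in", "is", "to"}

def clean_document(text, remove_stopwords=False):
    """Lowercase, strip URLs and punctuation, optionally drop stopwords."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # remove URLs
    text = re.sub(r"[^a-z0-9\s]", " ", text)   # ASCII-only: punctuation becomes spaces
    tokens = text.split()                      # also collapses repeated whitespace
    if remove_stopwords:
        tokens = [t for t in tokens if t not in STOPWORDS]
    return " ".join(tokens)

# Sample documents, invented for illustration.
raw_docs = ["The cat sat on the mat!", "Read more at https://example.com  today."]
processed_docs = [clean_document(doc, remove_stopwords=True) for doc in raw_docs]
```

Stopword removal is off by default here, since removing words before embedding can cost the model context it would otherwise use.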

Creating BERTopic Model

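With the preprocessed documents, we can now create our BERTopic model. Calling fit_transform embeds the documents, clusters them, and returns two values: a list with one topic id per document, and the corresponding probabilities, which we ignore here.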

from bertopic import BERTopic

model = BERTopic()

topics, _ = model.fit_transform(processed_docs)
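The first value returned by fit_transform holds one integer topic id per input document, and BERTopic uses the id -1 for documents it treats as outliers. A small sketch of how that list might be used to inspect results follows; the docs and topics values are hard-coded stand-ins rather than real model output, and group_by_topic is a hypothetical helper.

```python
from collections import defaultdict

# Stand-in values shaped like the first return value of fit_transform:
# one integer topic id per document, with -1 marking outliers.
docs = ["goal in extra time", "rates rise again", "penalty saved", "asdf qwerty"]
topics = [0, 1, 0, -1]

def group_by_topic(docs, topics):
    """Map each topic id to the documents assigned to it."""
    grouped = defaultdict(list)
    for doc, topic in zip(docs, topics):
        grouped[topic].append(doc)
    return dict(grouped)

grouped = group_by_topic(docs, topics)

# Documents that were not assigned to any topic.
outliers = grouped.get(-1, [])
```

Reading a few documents per topic this way is a quick sanity check before relying on the topic keywords alone.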

Visualizing Topics

To gain insights from the generated topics, BERTopic offers interactive visualizations. The visualize_topics method returns a Plotly figure with an intertopic distance map: each topic is drawn as a circle in two dimensions, sized by how many documents it contains, and hovering over a circle shows its top keywords.

fig = model.visualize_topics()

fig.show() # Opens the interactive plot

Advanced Customization with BERTopic

BERTopic provides several advanced customization options to improve topic modeling results:

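Choosing Optimal Parameters: BERTopic derives the number of topics from the clustering step, so it does not need to be fixed in advance. The nr_topics parameter sets a target instead: after fitting, topics are merged until roughly that many remain. Setting nr_topics="auto" lets BERTopic decide how far to reduce, and min_topic_size controls how small a cluster may be, which also affects how many topics emerge. Experimenting with these values helps find a suitable granularity for a dataset.

model = BERTopic(nr_topics=10) # Reduce the fitted topics to about 10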

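Topic Reduction and Merging: In some cases, we might want to reduce the number of topics or merge similar ones after the model has been fitted. BERTopic offers reduce_topics and merge_topics for this. In recent releases, reduce_topics takes the documents the model was fitted on and a target nr_topics, while merge_topics takes the documents and an explicit list of topic ids to combine; it does not accept a similarity threshold. These signatures have changed between versions, so check the documentation for the installed release. The topic ids below are placeholders.

model.reduce_topics(processed_docs, nr_topics=10) # Reduce to about 10 topics

model.merge_topics(processed_docs, topics_to_merge=[1, 2]) # Merge topics 1 and 2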
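The idea of merging topics once they pass a similarity threshold can be sketched independently of the library. The example below is a plain-Python illustration, not BERTopic's own logic: it compares hypothetical keyword weights with cosine similarity and lists the topic pairs at or above a threshold of 0.7. The helper names and the weights are assumptions for illustration; the resulting pairs are candidates that could then be handed to a merge step.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-weight dicts."""
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def merge_candidates(topic_words, threshold=0.7):
    """Return pairs of topic ids whose keyword weights reach the threshold."""
    return [
        (t1, t2)
        for t1, t2 in combinations(sorted(topic_words), 2)
        if cosine_similarity(topic_words[t1], topic_words[t2]) >= threshold
    ]

# Hypothetical keyword weights for three topics.
topic_words = {
    0: {"goal": 0.9, "match": 0.7, "team": 0.4},
    1: {"goal": 0.8, "match": 0.6, "league": 0.3},
    2: {"bank": 0.9, "rates": 0.8},
}
pairs = merge_candidates(topic_words)
```

Here topics 0 and 1 share most of their weight on "goal" and "match", so they are flagged as a pair, while topic 2 shares no keywords with either and stays separate.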

Conclusion

Topic modeling is a powerful technique that allows us to uncover the latent themes and structures within text data. BERTopic builds on transformer embeddings to group documents by meaning rather than by shared words alone. With a short implementation path and options for tuning, reducing, and merging topics, it gives users a practical way to extract meaningful insights from their text data.

To explore further, read the BERTopic documentation and the original research paper.